Explore the accuracy and limitations of mathematical capabilities in ChatGPT-4. Discover how its architecture, training data, and the need for verification impact its mathematical responses. Learn why cross-checking is essential for critical calculations. Dive into examples illustrating its performance and potential errors.

The math in ChatGPT-4 is generally very accurate for a wide range of problems, from basic arithmetic to complex calculus and beyond. However, the model can sometimes make mistakes, especially with very complex or nuanced problems. It’s always a good idea to double-check important calculations.

The accuracy of the math in ChatGPT-4

The accuracy of the math in ChatGPT-4, or any other AI model, depends on various factors, including the architecture of the model, the quality and quantity of the training data, and the specific mathematical tasks or questions being asked. In this article, we will delve into these factors to provide a detailed assessment of the accuracy of the math in ChatGPT-4.

1. Architecture and Training

ChatGPT-4 runs on GPT-4, a deep learning model. It uses a transformer architecture, which is known for handling a wide range of natural language processing tasks, including text that involves mathematical computations. The accuracy of the math in ChatGPT-4 is largely influenced by this architecture and the training process.

During training, ChatGPT is exposed to a vast amount of text data from the internet. This data includes a wide range of mathematical information, from basic arithmetic to advanced calculus and beyond. The model learns patterns and associations from this data, including how to perform mathematical operations. However, it’s important to note that the model does not have a deep understanding of mathematics in the way humans do. Instead, it relies on statistical patterns it has learned.

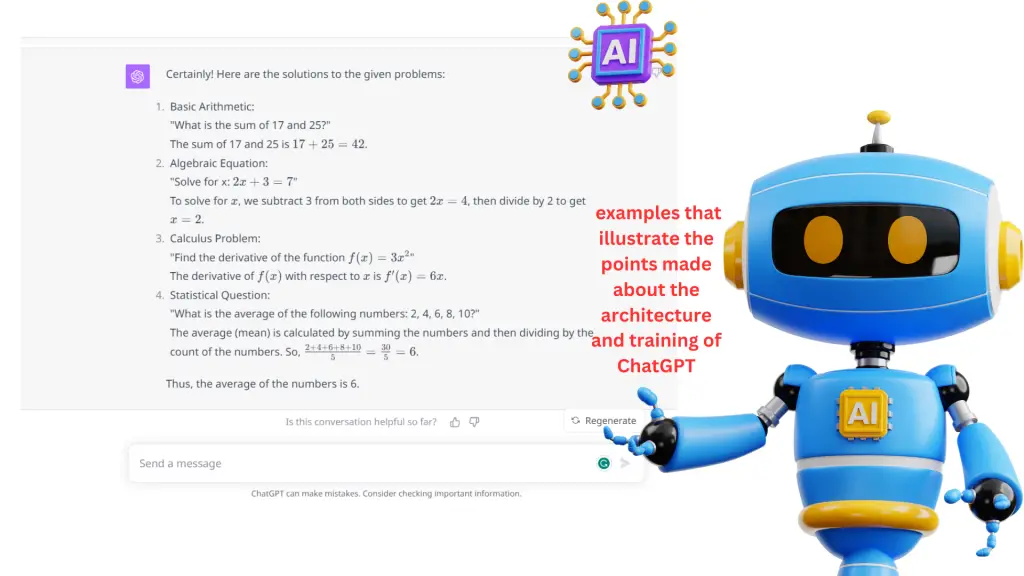

Here are some examples that illustrate the points made about the architecture and training of ChatGPT:

- Basic Arithmetic: If you ask ChatGPT, “What is the sum of 17 and 25?” it will quickly use the patterns it learned during training to compute the answer, which is 42.

- Algebraic Equation: For a question like “Solve for x: 2x + 3 = 7,” ChatGPT will apply the learned algebraic rules and respond with “x = 2,” demonstrating its ability to handle algebraic manipulations.

- Calculus Problem: If posed with a calculus problem such as “Find the derivative of the function f(x) = 3x^2,” ChatGPT can correctly apply the differentiation rules it has learned to produce the derivative “f'(x) = 6x.”

- Statistical Question: When asked to calculate the mean of a data set, such as “What is the average of the following numbers: 2, 4, 6, 8, 10?” ChatGPT can use the pattern it has seen in statistical problems to calculate the mean, which in this case would be “6.”

These examples demonstrate ChatGPT’s ability to apply mathematical operations based on patterns it learned during training. The responses are computed based on the statistical associations found in the data it was trained on, rather than an intrinsic understanding of mathematical principles.
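As a minimal sketch of how the four answers above could be checked independently, the following Python snippet recomputes each one using only the standard library. The helper names `solve_linear` and `central_difference` are illustrative assumptions, not part of any ChatGPT tooling, and the derivative is checked numerically at a single point rather than symbolically.

```python
from fractions import Fraction
from statistics import mean

# 1. Basic arithmetic: the sum of 17 and 25.
arithmetic_sum = 17 + 25

# 2. Linear equation a*x + b = c, solved exactly as x = (c - b) / a.
def solve_linear(a, b, c):
    return Fraction(c - b, a)

x = solve_linear(2, 3, 7)  # 2x + 3 = 7

# 3. Derivative of f(x) = 3x^2, estimated with a central difference.
def f(value):
    return 3 * value ** 2

def central_difference(func, point, step=1e-6):
    return (func(point + step) - func(point - step)) / (2 * step)

# If f'(x) = 6x is right, the slope at x = 2 should be close to 12.
slope_at_2 = central_difference(f, 2.0)

# 4. Mean of the data set 2, 4, 6, 8, 10.
average = mean([2, 4, 6, 8, 10])

print(arithmetic_sum, x, round(slope_at_2, 4), average)
```

A check like this does not depend on anything the model said, which is the point: it recomputes each result from the definition and only then compares it with the claimed answer.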

2. Quality and Quantity of Data

The accuracy of the math in ChatGPT-4 is also influenced by the quality and quantity of the training data. If the model has been exposed to a diverse and comprehensive dataset that covers a wide range of mathematical topics, it is more likely to perform well in mathematical tasks. However, the model can still make mistakes, especially if the training data contains errors or inconsistencies.

Additionally, if ChatGPT encounters a mathematical question or concept that is rare or not well-represented in its training data, it may struggle to provide an accurate answer. This is because the model’s responses are based on what it has seen during training, and it may not have encountered every possible mathematical scenario.

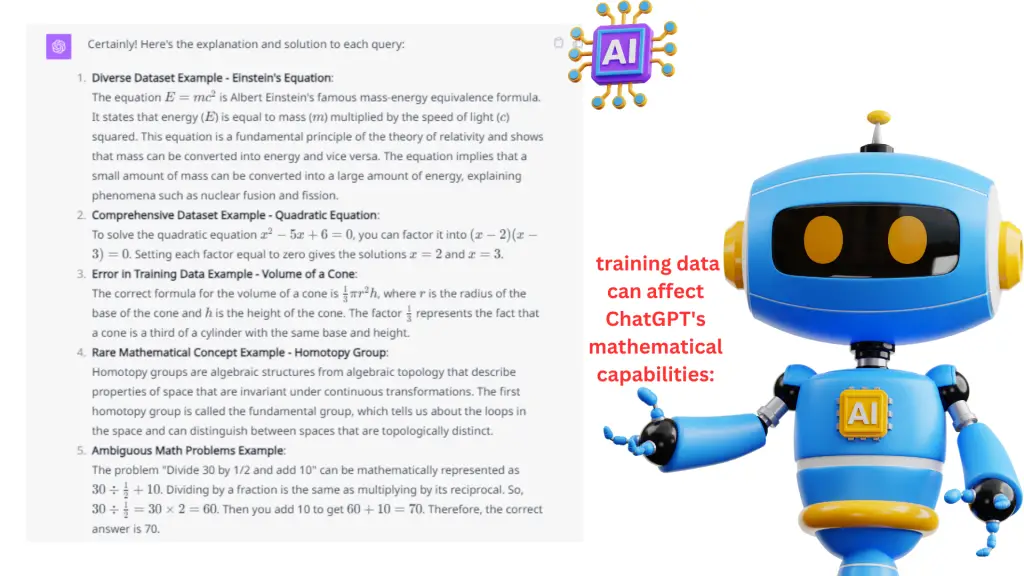

Here are examples that illustrate how the quality and quantity of training data can affect ChatGPT’s mathematical capabilities:

- Diverse Dataset Example: ChatGPT has been exposed to common equations used in physics, like E = mc^2. Because it saw a variety of explanations of this equation during training, it can explain what the equation means and relate it to concepts like energy and mass.

- Comprehensive Dataset Example: In training, ChatGPT has likely seen many variations of the quadratic equation. If you ask ChatGPT to solve x^2 − 5x + 6 = 0, it can factor this to (x − 2)(x − 3) = 0 and find the roots x = 2 and x = 3, showing its ability to apply well-represented mathematical procedures.

- Error in Training Data Example: If there were inaccuracies in the data concerning the formula for the volume of a cone, ChatGPT might occasionally reproduce such errors. For instance, the correct formula is (1/3)πr^2h, but if the model learned an incorrect version, it might answer incorrectly.

- Rare Mathematical Concept Example: Suppose there is a specialized area of mathematics not commonly discussed in the training data, like certain advanced topics in algebraic topology. If asked to explain “What is a homotopy group?”, ChatGPT might provide a vague or incorrect explanation due to limited exposure to this topic.

- Ambiguous Math Problems Example: If a math problem is ambiguously stated and could be interpreted in multiple ways, ChatGPT’s response may depend on which interpretation it has seen more frequently in the training data. For example, if the problem “Divide 30 by 1/2 and add 10” is posed, the model might provide the correct mathematical answer of 70 (since dividing by 1/2 is the same as multiplying by 2), or it might misunderstand and return an incorrect answer if that type of problem was not well-represented in the training.

These examples reflect the model’s reliance on the scope and accuracy of the data it was trained on to generate correct and sensible mathematical responses.
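The quadratic, cone volume, and “Divide 30 by 1/2 and add 10” examples can each be recomputed directly. The sketch below is one way to do that in Python; the function names `quadratic_roots` and `cone_volume` and the sample cone dimensions are assumptions chosen for illustration.

```python
import math
from fractions import Fraction

def quadratic_roots(a, b, c):
    """Real roots of a*x^2 + b*x + c = 0 via the quadratic formula."""
    discriminant = b * b - 4 * a * c
    if discriminant < 0:
        raise ValueError("no real roots")
    root = math.sqrt(discriminant)
    return sorted([(-b - root) / (2 * a), (-b + root) / (2 * a)])

def cone_volume(radius, height):
    """Volume of a cone: (1/3) * pi * r^2 * h."""
    return math.pi * radius ** 2 * height / 3

# x^2 - 5x + 6 = 0 should give the roots 2 and 3.
roots = quadratic_roots(1, -5, 6)

# Sample dimensions (assumed): r = 3, h = 4 gives a volume of 12 * pi.
volume = cone_volume(3, 4)

# Two readings of "Divide 30 by 1/2 and add 10".
divide_by_one_half = 30 / Fraction(1, 2) + 10  # the literal reading
divide_in_half = 30 / 2 + 10                   # a common misreading

print(roots, volume, divide_by_one_half, divide_in_half)
```

Writing out both readings of the ambiguous problem makes it easy to see which interpretation a given answer corresponds to.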

3. Limitations and Common Errors

ChatGPT, like all AI models, has limitations when it comes to mathematical accuracy:

- Lack of Context: The model may provide accurate mathematical answers, but it may not always understand the context of the question. For example, it might perform a mathematical operation correctly but fail to recognize that the question is about a real-world problem.

- Ambiguity: Math can sometimes involve ambiguity, especially in word problems. ChatGPT may misinterpret ambiguous questions and provide incorrect answers.

- Errors in Training Data: If the training data contains mathematical errors or incorrect information, ChatGPT may learn and replicate those errors.

- Complex Concepts: While ChatGPT can handle many mathematical concepts, it may struggle with highly specialized or advanced mathematics that require deep understanding and problem-solving skills.

- Numerical Precision: The model operates with finite numerical precision, so it may round numbers or make approximations in its calculations.

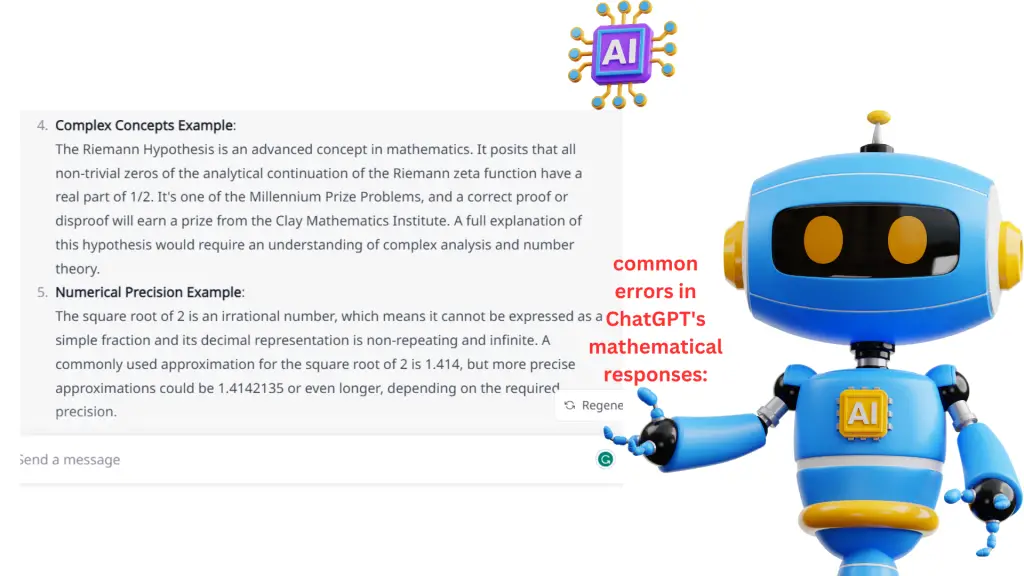

Here are examples that illustrate the limitations and common errors in ChatGPT’s mathematical responses:

- Lack of Context Example: Question: “If a car travels at 60 miles per hour, how long will it take to cover 120 miles?” Response: ChatGPT might correctly calculate the time as 2 hours, but it may not grasp that this is a calculation related to a real-world scenario involving a car journey.

- Ambiguity Example: Question: “A plane is flying at 500 miles per hour. How far will it travel in 2 hours?” Response: ChatGPT might provide an accurate answer of 1000 miles, but if the question were phrased less clearly or had multiple interpretations, the model could misinterpret it.

- Errors in Training Data Example: If ChatGPT’s training data contained an incorrect formula for the area of a triangle, such as “Area = base * height” instead of the correct “Area = (base * height) / 2,” the model might occasionally provide incorrect answers when asked about triangles.

- Complex Concepts Example: Question: “Explain the Riemann Hypothesis.” Response: ChatGPT might provide a vague or incomplete explanation or even misconceptions about this advanced mathematical concept, as it lacks the deep understanding required for such complex topics.

- Numerical Precision Example: Question: “What is the square root of 2?” Response: ChatGPT might provide an approximation like “1.414” rather than an exact value. Because the square root of 2 is irrational, its decimal expansion never terminates, so any decimal answer is necessarily rounded.

These examples highlight situations where ChatGPT’s mathematical capabilities may fall short, either due to context misunderstanding, ambiguity, training data issues, limitations in handling complex concepts, or numerical approximations.
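To make the numerical precision point concrete, the following sketch compares the three-decimal approximation of the square root of 2 with a higher-precision value from Python's `decimal` module. The choice of 50 significant digits is an arbitrary assumption for illustration.

```python
import math
from decimal import Decimal, getcontext

# Work with 50 significant digits (an arbitrary choice for this sketch).
getcontext().prec = 50
root_two = Decimal(2).sqrt()

# The short approximation quoted in the example above.
short_approximation = Decimal("1.414")

# Squaring each value shows how far it is from exactly 2.
short_error = abs(short_approximation ** 2 - 2)
long_error = abs(root_two ** 2 - 2)

# A standard double-precision float sits between the two.
float_root = math.sqrt(2)

print(root_two)
print(short_error, long_error, float_root)
```

Even the 50-digit value is still an approximation. Its squared error is much smaller than that of 1.414, but it is not zero.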

4. Verification and Cross-Checking

Given the potential for errors, it’s essential to use ChatGPT’s mathematical responses as a reference rather than the final authority. For critical calculations or important mathematical tasks, it is advisable to verify and cross-check the answers using trusted sources or dedicated mathematical software.

Here are some examples that emphasize the importance of verification and cross-checking when using ChatGPT’s mathematical responses:

- Financial Calculations Example: Suppose you are calculating the interest on a significant loan or investment. Before making financial decisions based on ChatGPT’s calculations, it’s crucial to cross-check the results with trusted financial tools or consult a financial expert to ensure accuracy.

- Medication Dosage Example: If you need to determine the correct dosage of medication based on weight and other factors, it’s essential to verify ChatGPT’s calculations with a medical professional or trusted medical references to ensure patient safety.

- Engineering Design Example: In engineering design, precise mathematical calculations are critical. Engineers often use specialized software or consult colleagues to verify complex calculations, such as structural load analysis, before proceeding with a project.

- Scientific Research Example: Researchers conducting experiments or simulations in scientific fields should double-check any mathematical models or equations provided by ChatGPT against established scientific literature or collaborate with peers to validate their findings.

- Academic Work Example: Students working on assignments, research papers, or exams should not solely rely on ChatGPT’s answers for critical mathematical problems. It’s advisable to verify solutions through textbooks, class notes, or discussions with instructors.

- Architectural Measurements Example: Architects and builders should independently verify calculations for dimensions, materials, and structural integrity, especially when working on construction projects where errors can have significant consequences.

In all these scenarios, the potential consequences of relying solely on ChatGPT’s mathematical responses can be significant. To ensure accuracy and make informed decisions, it’s prudent to verify and cross-check calculations using trusted sources, domain-specific tools, or consultation with experts when necessary.
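For the financial case, cross-checking might look like the minimal sketch below: recompute the figure from the formula and compare it with the claimed value within a tolerance. The loan figures, the `claimed_interest` value, and the function names are hypothetical, and a real financial decision would still call for a dedicated tool or an expert.

```python
import math

def compound_interest(principal, annual_rate, years, periods_per_year=12):
    """Interest earned with periodic compounding: P * (1 + r/n)^(n*t) - P."""
    growth = (1 + annual_rate / periods_per_year) ** (periods_per_year * years)
    return principal * growth - principal

def cross_check(claimed, recomputed, rel_tol=1e-4):
    """Return True when a claimed value agrees with an independent recomputation."""
    return math.isclose(claimed, recomputed, rel_tol=rel_tol)

# Hypothetical scenario: 10,000 invested at 5% per year, compounded monthly, for 10 years.
recomputed_interest = compound_interest(10_000, 0.05, 10)

# Hypothetical claimed answer that used simple interest (10,000 * 0.05 * 10) by mistake.
claimed_interest = 5_000.00

if cross_check(claimed_interest, recomputed_interest):
    print("Claimed value matches the recomputation.")
else:
    print(f"Mismatch: claimed {claimed_interest:.2f}, recomputed {recomputed_interest:.2f}")
```

The relative tolerance lets small rounding differences pass while still flagging a wrong formula, such as simple interest used in place of compound interest.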

Conclusion – How accurate is the math in ChatGPT-4

In conclusion, the accuracy of the math in ChatGPT-4 is influenced by its architecture, training data, and inherent limitations. While it can provide accurate answers to a wide range of mathematical questions, users should exercise caution, especially for complex or context-dependent mathematical tasks. It is always a good practice to verify important calculations independently to ensure accuracy. AI models like ChatGPT are powerful tools, but they are not infallible and should be used judiciously.